Web scraping tools offer users many advantages: they are effective, powerful, and can extract data at high speed. They are a great alternative to manually copying and pasting data. Even so, these tools still have limitations in both their capabilities and their operation.

What is Web scraping?

Web scraping is a powerful technique for fetching large amounts of data from a website. A web scraper extracts unstructured data from web pages and stores it in a structured form, such as a local file on your computer or a database.
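To make the idea of turning unstructured markup into structured data more concrete, here is a minimal sketch using only Python's standard library. The sample HTML, the `name` and `price` class names, and the helper names `ProductParser` and `html_to_csv` are all assumptions made up for illustration; a real page would need its own parsing rules.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download the HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">19.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> tags."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished records, one dict per <li>
        self._field = None   # field currently being read, if any
        self._current = {}   # record under construction

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # The list item is complete, so store the record.
            self.rows.append(self._current)
            self._current = {}


def html_to_csv(html):
    """Parse the HTML and return the extracted records as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


print(html_to_csv(SAMPLE_HTML))
```

The same rows could just as well be written to a file on disk or inserted into a database; CSV text is used here only to keep the sketch self-contained.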

Web scraping is also called web data extraction or web harvesting. The term usually refers to automated processes implemented with an Internet bot or a web crawler (spider).

What is Web scraping used for?

Web scraping is used for various purposes, including contact scraping, price comparison, SEO monitoring, competitor analysis, gathering real estate listings, social media scraping, and brand monitoring, to name but a few. Web scraping can also be used as a component of applications for web indexing or data mining.

What are the limitations of web scraping tools?

Difficult to understand

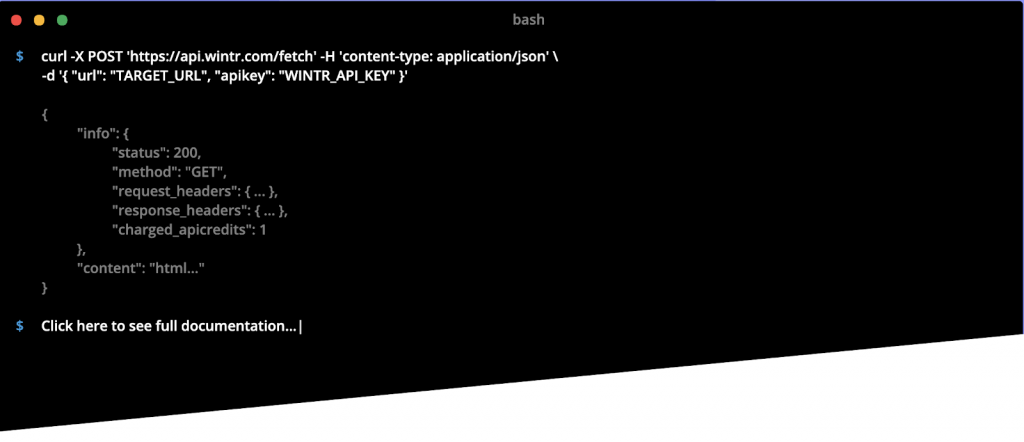

For newcomers to this field, scraping processes can be very difficult to understand. There are many new concepts and a lot of background knowledge to absorb before you can carry out web scraping smoothly with the aid of tools. Even the simplest scraping tool takes a lot of time to master. Many tools still require programming and coding skills, and some no-code web scraping tools may take users weeks to learn. To perform web scraping successfully, you need a grasp of APIs, XPath, HTML, and AJAX.
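As a small taste of what XPath looks like in practice, the sketch below selects links with a path expression. It relies on the limited XPath subset in Python's `xml.etree.ElementTree`, which only works on well-formed markup; the sample document, the `listing` class, and the function name `extract_listing_links` are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample markup. Real-world HTML is often not
# valid XML and would need a more tolerant parser.
SAMPLE = """
<html>
  <body>
    <div class="listing"><a href="/item/1">First item</a></div>
    <div class="listing"><a href="/item/2">Second item</a></div>
    <div class="ad"><a href="/promo">Sponsored</a></div>
  </body>
</html>
"""


def extract_listing_links(markup):
    """Return (text, href) pairs for links inside <div class="listing">."""
    root = ET.fromstring(markup)
    # ".//" searches at any depth; "[@class='listing']" filters by attribute.
    links = root.findall(".//div[@class='listing']/a")
    return [(a.text, a.get("href")) for a in links]


print(extract_listing_links(SAMPLE))
```

The path expression skips the `ad` block because its `class` attribute does not match, which is the kind of rule a newcomer has to learn to read and write.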

The structure of the website changes frequently

Websites regularly update their content and improve their user interface to raise the quality of their services and enhance the user experience. However, even a slight change can corrupt your data. A web scraping tool built around the design of a page at a certain point in time can become useless once the page is upgraded. Web scraping tools therefore require regular adjustments to keep up with recent changes to the target pages.
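The sketch below illustrates how a small redesign can break an extraction rule, and one possible way to notice it early instead of silently storing bad data. Both page versions, the `PRICE_PATH` rule, and the `LayoutChangedError` exception are hypothetical names invented for this example.

```python
import xml.etree.ElementTree as ET

# Two hypothetical versions of the same page: before and after a redesign.
OLD_PAGE = "<html><body><span class='price'>19.99</span></body></html>"
NEW_PAGE = "<html><body><div class='cost'><b>19.99</b></div></body></html>"

# Extraction rule written against the old layout.
PRICE_PATH = ".//span[@class='price']"


class LayoutChangedError(Exception):
    """Raised when the expected element is missing from the page."""


def extract_price(page):
    """Return the price as a float, or raise if the layout no longer matches."""
    node = ET.fromstring(page).find(PRICE_PATH)
    if node is None or not (node.text or "").strip():
        raise LayoutChangedError(f"nothing matched {PRICE_PATH!r}")
    return float(node.text)


print(extract_price(OLD_PAGE))

try:
    extract_price(NEW_PAGE)
except LayoutChangedError as exc:
    print("scraper needs an update:", exc)
```

Failing loudly does not fix the scraper, but it tells you that the rule needs adjusting as soon as the page changes.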

Getting blocked by search engines or websites

Regular updates on data play an important role in the development of a business. As a result, web scrapers have to access the target website and harvest the data again and again. With cutting-edge anti-scraping technologies, however, it is quite easy to detect non-human activity online. If you send too many requests from a single IP address and the website has strict rules on scraping, your IP address is likely to get blocked.
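One common way to avoid flooding a site from a single IP address is to space requests out. The following is a minimal sketch of such a throttle, not a guarantee against blocking; the `Throttle` class, the interval value, and the example URLs are assumptions for illustration, and the actual download step is left out.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock               # injectable for testing
        self._sleep = sleep               # injectable for testing
        self._last = None                 # time of the previous request

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Hypothetical usage: a very short interval keeps the demo fast.
throttle = Throttle(min_interval=0.05)
for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    throttle.wait()
    print("would fetch", url)  # a real scraper would download the page here
```

A suitable interval depends on the target website and its rules on scraping, so the value above is only a placeholder.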

Large scale extraction is not possible

If your business wants to scale up, it needs to develop its data harvesting and scrape data on a large scale. However, this is not an easy task. Many web scraping tools are built to meet small, one-time data extraction requirements, so they struggle to fetch millions of records.

Complex web page structure

This is another limitation of web scraping tools. By one rough estimate, about 50% of websites are easy to scrape, 30% are moderately difficult, and the remaining 20% are rather difficult to extract data from. In the past, scraping HTML web pages was a simple task. Nowadays, however, many websites rely heavily on JavaScript or AJAX techniques to load content dynamically. Handling such content requires complex libraries, which can hinder web scrapers in getting data from these websites.
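The sketch below shows why dynamically loaded content is harder to scrape. The static HTML contains only an empty container, while the data arrives separately as JSON. The page, the JSON payload, and the function names are all made up for illustration, and no real request is sent.

```python
import json
from html.parser import HTMLParser

# Hypothetical static HTML as a simple scraper would receive it: the product
# list is empty because a script fills it in later inside the browser.
STATIC_HTML = """
<html><body>
  <div id="products"></div>
  <script src="/static/app.js"></script>
</body></html>
"""

# Hypothetical JSON that the page's script would load in the background.
AJAX_RESPONSE = (
    '{"products": [{"name": "Kettle", "price": 19.99},'
    ' {"name": "Toaster", "price": 24.5}]}'
)


class ProductsDivText(HTMLParser):
    """Collects visible text found inside <div id="products">."""

    def __init__(self):
        super().__init__()
        self.depth = 0   # nesting level of <div> tags inside the container
        self.text = []

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == "products":
            self.depth = 1

    def handle_endtag(self, tag):
        if tag == "div" and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth and data.strip():
            self.text.append(data.strip())


def products_from_html(html):
    parser = ProductsDivText()
    parser.feed(html)
    return parser.text


def products_from_json(payload):
    return [item["name"] for item in json.loads(payload)["products"]]


print(products_from_html(STATIC_HTML))    # the static markup holds no products
print(products_from_json(AJAX_RESPONSE))  # the data lives in the JSON payload
```

A scraper that only parses the initial HTML comes back empty-handed here. It either has to find and call the background data source directly or run the page's scripts with a browser automation library.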