Duplicate content is a significant problem for search engines like Google. Put simply, duplicate content occurs when the same content appears at more than one location (URL) on the web. The search engine then has trouble deciding which URL to rank on the search engine results page (SERP).

Why Should You Try to Fix Duplicate Content?

Duplicate content is a matter of concern for both search engines and website owners. Let’s see how.

Search Engines

Duplicate content presents search engines with three major issues:

- Which version to include or exclude from the indices?

- Should the link metrics (trust, link equity, authority, anchor text, etc.) be directed to one page or kept separate across multiple pages?

- Which page to rank for the search results?

Website Owners

Site owners can suffer lower rankings and lose traffic when duplicate content is present on their website. Google will rarely show multiple versions of the same content, so it has to pick one, which dilutes the visibility of each version. Other websites also have to choose between the duplicates: instead of all inbound links pointing to a single piece of content, they end up spread across several copies. With duplicate pages in the mix, it also becomes harder to establish which page is the authentic one. Because inbound links are a significant ranking factor, this split can reduce the search visibility of the content.

Causes of Duplicate Content

(Source: yoast.com)

Google’s Matt Cutts has stated that 25-30% of the web content is duplicate. Let’s check out the causes.

URL Variations

Let’s start with an example:

- http://www.stylenow.com/products/clothing.aspx

- http://www.stylenow.com/products/clothing.aspx?category=men&color=black

- http://www.stylenow.com/products/clothing.aspx?category=men&type=shirt&color=black

All of the above URLs point, in theory, to the same underlying content; the only difference is that some versions are sorted or filtered. URLs of this sort are very common on e-commerce websites. Google may flag these pages as duplicate content unless crawling of the duplicate versions is prevented.
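One way to see why these URLs count as duplicates is to reduce each of them to a single form. The Python sketch below is a minimal illustration, assuming that `category`, `type` and `color` only filter the listing and do not change the underlying content. The `FILTER_PARAMS` set and the `canonical_url` helper are hypothetical names introduced here, not part of any SEO tool.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these query parameters only filter or sort the listing.
FILTER_PARAMS = {"category", "type", "color"}


def canonical_url(url: str) -> str:
    """Return the URL with filter-only query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in FILTER_PARAMS
    ]
    # Rebuild the URL with the remaining parameters and no fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


urls = [
    "http://www.stylenow.com/products/clothing.aspx",
    "http://www.stylenow.com/products/clothing.aspx?category=men&color=black",
    "http://www.stylenow.com/products/clothing.aspx?category=men&type=shirt&color=black",
]

# All three variants collapse to one URL.
print({canonical_url(u) for u in urls})
```

Parameters that do change the content, such as a page number, are left in place, so only the filter variants are merged.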

WWW vs NON-WWW

Check the following URLs –

- www.stylenow.com

- stylenow.com

- http://www.stylenow.com

- http://stylenow.com/

- https://www.stylenow.com

- https://stylenow.com

Although all of these lead to the same site, search engine bots read them as different URLs. For example, a bot will treat https://www.stylenow.com and http://www.stylenow.com as separate pages with duplicate content.
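The variants listed above can be mapped to one preferred form. The following Python sketch assumes the site owner has picked `https` with the `www` prefix as the preferred version; that choice, and the `preferred_url` helper, are assumptions made for illustration only.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: https + www is the version the site owner prefers.
BARE_HOST = "stylenow.com"
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www." + BARE_HOST


def preferred_url(url: str) -> str:
    """Map any scheme/www variant of the site to the preferred form."""
    # Bare hosts such as "stylenow.com" carry no scheme, so add one for parsing.
    if "://" not in url:
        url = "http://" + url
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    if bare != BARE_HOST:
        return url  # a different site: leave it untouched
    path = parts.path or "/"
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, ""))


variants = [
    "www.stylenow.com",
    "stylenow.com",
    "http://www.stylenow.com",
    "http://stylenow.com/",
    "https://www.stylenow.com",
    "https://stylenow.com",
]

# Every variant resolves to the same preferred URL.
print({preferred_url(v) for v in variants})
```

In practice this mapping is usually enforced on the server with permanent redirects, so that bots only ever see the preferred version.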

Scraped Content

Content is not limited to editorials and blog posts; product information pages count as content too. Duplication in product information pages is very common on e-commerce sites. Moreover, scrapers may republish your content on their own websites, which is another source of duplication.

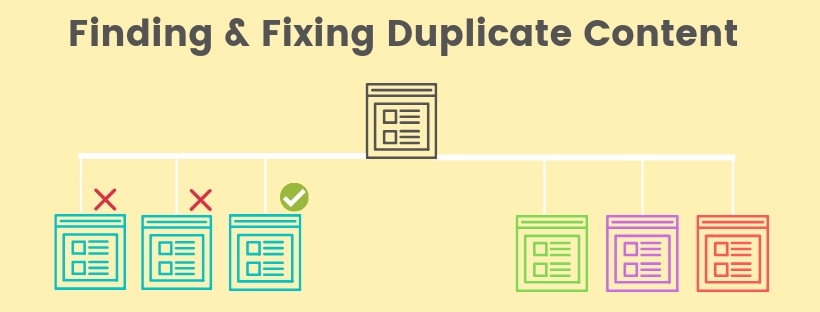

Fixing Duplicate Content

(Source: tomcrowedigital.com)

Fixing duplicate content comes down to telling search engines which of the duplicates is the “correct” one. If you find multiple URLs serving the same content, canonicalise them so that bots know which version to use.

Let’s see the three ways to carry out canonicalization:

Rel=canonical attribute

By adding a canonical tag, rel="canonical", you tell the search engine that a specific URL represents the master copy of a page, so the search engine should credit the ranking power to that master page.
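As a rough illustration, the tag is a `<link>` element placed in the HTML head of each duplicate page. The Python sketch below builds that element for a given master URL; the `canonical_link_tag` helper is a hypothetical name, and the stylenow.com URL is simply reused from the earlier example.

```python
from html import escape


def canonical_link_tag(master_url: str) -> str:
    """Build the <link> element for the <head> of each duplicate page."""
    # Escape the URL so characters such as & are valid inside the attribute.
    return f'<link rel="canonical" href="{escape(master_url, quote=True)}">'


print(canonical_link_tag("https://www.stylenow.com/products/clothing.aspx"))
# prints <link rel="canonical" href="https://www.stylenow.com/products/clothing.aspx">
```

Each filtered or parameterised variant of the page would carry the same tag, all pointing at the one master URL.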

301 Redirect

A 301 redirect is a permanent redirect that sends visitors and bots from the duplicate page to the original page, and it is commonly said to pass over 90% of the link equity to that destination. In many cases, 301 redirects are used to combat duplicate content.
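At the HTTP level, a 301 redirect is just a response with status code 301 and a `Location` header naming the original page. The sketch below shows the idea with Python's standard library; the `REDIRECTS` mapping, the `/clothing` path and the `resolve` helper are hypothetical, and a production site would normally configure this in its web server rather than in application code.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler

# Hypothetical mapping from duplicate paths to the original page.
REDIRECTS = {"/clothing": "/products/clothing.aspx"}


def resolve(path: str):
    """Return (status, headers) for a request path."""
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and bots that the move is permanent.
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": target}
    return HTTPStatus.OK, {}


class RedirectHandler(BaseHTTPRequestHandler):
    """Minimal handler that answers duplicate paths with a 301."""

    def do_GET(self):
        status, headers = resolve(self.path)
        self.send_response(status)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()
```

The handler can be served with `http.server.HTTPServer` for local experiments; `resolve` can also be called on its own to check which paths redirect.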

Meta Robots Noindex

The meta robots tag is also useful for dealing with duplicate content. The tag, with content="noindex,follow", is added to the HTML head of every page that should be excluded from the search engine’s index. Bots can still follow the links on such a page; they simply leave the page itself out of the index.
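To verify that a page actually carries the tag, its HTML head can be inspected. The Python sketch below uses the standard `html.parser` module to look for a robots meta tag containing `noindex`; the `RobotsMetaParser` class and `is_noindex` helper are hypothetical names, and the sample page is made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key.lower(): (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex,follow"></head><body></body></html>'
print(is_noindex(page))  # prints True
```

A check like this could be run over the duplicate URLs of a site to confirm that each one is excluded as intended.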

Duplicate Content Checker Tools

Checking for duplicate text is very important, as plagiarism is still a big problem. Here’s a list of duplicate content checkers. Some are free, while others also have a paid version.
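The tools listed here do not necessarily document how they compare texts, so the following Python sketch should not be read as the method of any of them. It shows one common, simple technique, word shingling with Jaccard similarity, to give a feel for how overlap between two texts can be scored. The `shingles` and `similarity` helpers and the sample sentences are assumptions made for illustration.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Split text into overlapping word sequences of the given size."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of the shingle sets: 0.0 (no overlap) to 1.0 (identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "Duplicate content is a big problem for search engines like Google"
copy = "Duplicate content is a big problem for most website owners"

# A score close to 1.0 suggests the two texts are near-duplicates.
print(round(similarity(original, copy), 2))
```

Real checkers work against very large indexes of web pages, so they need far more scalable techniques than this pairwise comparison.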

COPYSCAPE

Copyscape is a popular duplicate content finder. The basic version offers an online plagiarism check, while the premium version is a more powerful plagiarism checker.

SITELINER

Siteliner is a free duplicate content checker that mainly checks the inner pages of a website for plagiarised content. It also reports broken links, page power and other metrics.

SMALL SEO TOOLS

Small SEO Tools is one of the major duplicate content tools, and it is free. It also offers a range of writing and editing tools, including a spelling checker, word counter, article rewriter, and text case changer.

GRAMMARLY

Although known for grammar checking, Grammarly also has a plagiarism checker. It compares your text against billions of web pages to detect plagiarised passages.

PREPOSTSEO

This is a free duplicate content checker with a lot of features. It lets you upload a file directly to check its level of duplication, which helps if your write-up is in a format such as PDF, DOC, DOCX or plain text. The tool is also available as a Chrome extension.

QUETEXT

Nearly 2 million users are using Quetext as their plagiarism checker. It uses DeepSearch technology and has a sleek interface. It does its job pretty accurately.

PLAGRAMME

The tool supports eighteen languages, including English, French, German, Russian and Spanish. It is especially popular among students and educators.

BIBME

BibMe checks for missing citations and unintentional plagiarism. It helps improve sentence structure, grammar and writing style, and it can add accurate citations and a bibliography directly to your paper.

WEBCONFS

The ultimate objective of this tool is to make search engine optimisation (SEO) effortless. Because duplicate content can seriously harm SEO, its plagiarism checker makes sure that any write-up is meticulously checked.

PLAGIARISMA

Plagiarisma is another useful free duplicate content checker. The tool has a desktop version for Windows, along with versions for Android, BlackBerry and Moodle. It also works as a web tool.

End Of The Line

As you can see from this write-up, duplicate content can seriously hurt a company’s website. We have covered the common ways duplicate content ends up on a website, how to fix it, and the various plagiarism tools that can help you determine whether the content being published is original. This is critical from a business point of view: the money you spend on SEO activities only delivers the right results if duplicate content is not undermining them. If you need assistance getting your website to the top of Google SERPs, contact a prominent company that offers the best SEO Services in Melbourne.
